Beyond the Hype: Practical Ways AI Is (and Isn’t) Changing Software Delivery

Explore how AI coding assistants are transforming software delivery by taking on repetitive tasks, necessitating a shift in how we train and integrate junior developers into the field amid evolving workflows.

From vibe coding demos that suggest anyone can build the next big SaaS platform using AI coding assistants, to think-pieces declaring the death of junior dev roles, AI’s impact on software delivery has become both oversold and under-explained. In this article we take a step back from the hype to share what we’re actually seeing on live projects: where generative models really speed things up, where they still trip over their shoelaces, and what that means for teams – from seasoned industry experts to grads hunting their first pull request.

The hype, the hope, and the Stack Overflow slump

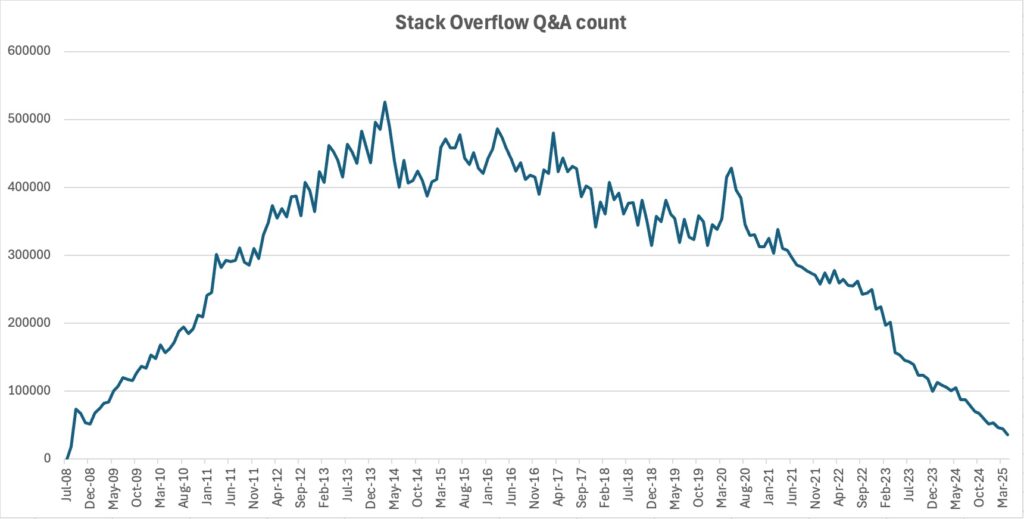

Ever since large language models (LLMs) learned to autocomplete code, headlines have swung between “robots will steal our keyboards” and “Copilot can’t centre a div, nothing to see here.” In the real world, it isn’t so cut and dried. That said, one statistic that was in the news recently does hint that real change is happening: the use of Stack Overflow (the Q&A website developers go to when they get stuck) has gone from growth to contraction – and in a big way.

Source - https://devclass.com/2025/05/13/stack-overflow-seeks-rebrand-as-traffic-continues-to-plummet-which-is-bad-news-for-developers/

Fewer visits to Stack Overflow mean coders are getting their answers somewhere else, and the obvious culprit is AI assistants.

What AI coding tools really excel at (and where they still face-plant)

Revium’s internal trials mirror the industry pattern: LLMs shine at repetitive or highly patterned tasks, stumble on nuance, and always benefit from a sceptical human review.

Some areas where the models earn their keep:

Churning out the boring bits – Think of the code equivalent of filling in name-and-address forms. The model can write that repetitive “plumbing” so developers can focus on the problem-solving.

Quick reality checks on old code – Give it a messy or ancient code file and it returns a plain-English summary or highlights lines that might break under odd conditions.

Fast drafts of tests and docs – Feed it a spreadsheet of requirements and it can give you tests and documentation ready to review.

Others where you still need the human touch:

It sometimes just makes things up – The model can reference tools that don’t exist or suggest shortcuts that open security holes, mistakes a seasoned developer would spot.

It can’t see the whole picture – Big corporate systems are larger than the chunk of code the AI can hold in its head (for now), so it may miss important connections.

Garbage in, garbage out – Vague or misleading instructions lead to equally vague or wrong code; unlike a human, the model won’t ask clarifying questions.

How Revium is actually using AI on live projects

We’re past the exploration phase and we do use AI in our workflows these days, albeit with limited scope and plenty of oversight.

1. Inline coding assistance

Developers run GitHub Copilot inside VS Code or Visual Studio. When needed, the AI drafts methods, flags linter errors, and even suggests SQL queries. Experience has taught us to treat it like an enthusiastic intern: great for first drafts, never for second opinions. Every line still goes through our standard human peer review process.

2. Automated code-level testing

OpenAI Codex ingests a business analyst’s (BA’s) test spreadsheet, checks whether the code will pass each test case, and produces a summary of the code that needs updating. Coverage jumps, boring edge-case hunts disappear, and the BA can focus on user-journey testing instead of nit-picking API responses.

3. Request-impact analysis

When an add-on feature request comes in, a prompt to Codex returns a report listing affected modules, database migrations and external integrations. Developers then sanity-check the list and can pull together an estimate much faster than doing it manually.

None of these steps run unattended in production. Each has a clearly defined human checkpoint where a developer reads, rejects or refines the AI output before the outcome is merged into production.
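As a rough illustration of the spreadsheet-driven testing step and the human checkpoint described above, here is a minimal Python sketch. It is not Revium's actual tooling: the column names (`id`, `description`, `expected`), the `SpecCase` type and all three function names are hypothetical, and the sketch deliberately stops short of calling any model API. It only shows the shape of the idea: turn the BA's rows into a review prompt, and refuse to pass a model's report along without a named human reviewer.

```python
import csv
import io
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SpecCase:
    """One row of the BA's test spreadsheet (hypothetical columns)."""
    case_id: str
    description: str
    expected: str


def load_spec_cases(csv_text: str) -> List[SpecCase]:
    """Parse a spreadsheet exported as CSV into spec cases.

    Assumes a header row with the columns: id, description, expected.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        SpecCase(row["id"].strip(), row["description"].strip(), row["expected"].strip())
        for row in reader
    ]


def build_review_prompt(cases: List[SpecCase], source_code: str) -> str:
    """Assemble a prompt asking a model which cases the code would fail."""
    lines = [
        "Review the code below against each test case.",
        "List the IDs of cases that would fail, with a one-line reason.",
        "",
        "Test cases:",
    ]
    for case in cases:
        lines.append(f"- {case.case_id}: {case.description} -> expect {case.expected}")
    lines += ["", "Code:", source_code]
    return "\n".join(lines)


def apply_with_checkpoint(model_report: str, approved_by: Optional[str]) -> str:
    """Human checkpoint: nothing proceeds without a named reviewer."""
    if not approved_by:
        raise PermissionError("AI output requires human review before merging")
    return f"Approved by {approved_by}:\n{model_report}"
```

The prompt text would be sent to whichever assistant the team uses, and the report that comes back would only move forward through `apply_with_checkpoint`, which makes the "human reads, rejects or refines" rule an explicit step rather than a convention.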

Four lessons from the trenches

Pick your battles. Legacy refactors? Yes. Core algorithm design? Still a human gig.

Prompt once, review twice. We version-control our prompts the same way we track code.

Governance isn’t optional. We redact PII, keep client IP out of public endpoints, and audit model outputs for licensing red flags.

Share the playbook. Standard prompts and usage patterns spread best practice faster, avoid duplication and maintain standards across the team.
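To make two of these lessons a little more concrete, here is a small Python sketch of redacting obvious PII before text leaves the building, and of fingerprinting a prompt template so a versioned prompt can be matched to the output it produced. Everything here is an assumption for illustration: the regular expressions only catch simple email addresses and phone-like number runs, the function names are made up, and real PII handling needs far more than two patterns.

```python
import hashlib
import re

# Illustrative patterns only: they catch simple emails and phone-like
# digit runs, and will miss many real-world formats.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace emails and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def prompt_fingerprint(template: str) -> str:
    """Short, stable hash of a prompt template.

    Logging this next to each model output ties the output back to the
    exact prompt version stored in version control.
    """
    return hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]


if __name__ == "__main__":
    print(redact_pii("Contact jane.doe@example.com or +61 400 123 456"))
    print(prompt_fingerprint("Summarise the risks in this module."))
```

A redaction pass like this is a safety net, not a guarantee, so it sits alongside the human review and audit steps rather than replacing them.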

The junior-developer dilemma

So what does this mean for junior developers? If an AI can crank out the easy stuff, where do graduates earn their stripes?

Right now the whole industry is scratching its head. Entry-level coding has traditionally been a rite of passage: you start by fixing typos and wiring database calls, then, after enough late-night debugging sessions, you graduate to architecture chats. If AI removes that first rung, the ladder looks worryingly tall and slippery.

No one has a complete answer yet, but a few ideas are bubbling up:

Re-invent the “grunt work.” Instead of writing boilerplate, juniors could review AI-generated code, flag security gaps, and practise explaining why a line is wrong – a skill every senior dev needs.

Structured mentoring beats accidental osmosis. With less repetitive work to hand out, teams may need deliberate mentoring programs, code-review shadowing, paired problem-solving and rotation through live incident response – all to replace the old “learn by doing” model.

Sandbox projects matter more than ever. Hack-days, open-source contributions and internal labs give newcomers a safe place to break things (and fix them) without relying on production ‘busywork’ that the AI now handles.

Ultimately, finding space for emerging talent is a shared responsibility across educators and employers. We don’t have the perfect blueprint yet, but acknowledging the gap is the first step toward designing a better on-ramp for the next generation of developers (or whatever that job becomes in the next 5–10 years).

So, is AI replacing developers?

For now, no. Well, at least not in our repos.

What’s changing is the shape of the work. AI in software development is more of a support act, helping with various tasks in confined ways and augmenting the work our experienced development team handles day to day. The outcome so far: cleaner code, less time spent on frustrating and mundane work, and more brain space for the thorny problems no autocomplete can solve.

Of course, the space is evolving. Over the coming years we will keep adapting our approach, integrating AI into our workflows where it makes sense, just as we have been doing up to now.